The invertible matrix theorem

What is an invertible matrix

What does it mean for a matrix to be invertible? In past lessons we learned that an invertible matrix is a square matrix for which there exists another matrix (called its inverse) such that the product of the two is the identity matrix of the same dimensions.

In other words, a matrix is invertible if and only if it has an inverse matrix, and multiplying the two together (in either order) gives the identity matrix of the same order. In this lesson we will discuss a list of characteristics that complement this definition of an invertible matrix; this list is what we call the invertible matrix theorem.

But first, let us quickly review how to invert a 2x2 matrix to explain this concept a little better. We will call this 2x2 matrix $A$. Remember from past lessons that $A$ is said to be an invertible 2x2 matrix if and only if there is an inverse matrix $A^{-1}$ which, multiplied by $A$, produces the 2x2 identity matrix $I_2$. Mathematically, the condition is:

$$A A^{-1} = A^{-1} A = I_2 \qquad \text{(equation 1)}$$

Inverting a 2x2 matrix is quite a simple task, but when we work with inverses of higher-order matrices we have to remember that the condition shown in equation 1 still holds when $n$ is greater than 2. In general, we can invert a matrix $A$ of dimensions $n \times n$ if the following condition is met:

$$A A^{-1} = A^{-1} A = I_n \qquad \text{(equation 2)}$$

Always keep in mind that there is a difference between an invertible matrix and an inverted matrix. An invertible matrix is any matrix that can be inverted (its determinant is nonzero), while an inverted matrix is one that has already gone through the inversion process. In equation 2, $A$ is the invertible matrix and $A^{-1}$ is the inverted matrix. This is just to stress that the two matrices are not the same, so while working through problems it is important to remember which one is your original.

In the next section we will learn how to tell when a matrix is invertible, and when it is not, by using the invertible matrix theorem.
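The defining condition can be checked by hand or in a few lines of code. The sketch below is only an illustration: the matrix `A` and the helper names `matmul` and `inverse_2x2` are assumptions made for this example, not something taken from the lesson. It inverts a 2x2 matrix with the usual adjugate formula and confirms that the product is the identity in both orders.

```python
from fractions import Fraction

def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def inverse_2x2(M):
    """Invert a 2x2 matrix with the adjugate formula; return None if det = 0."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        return None  # not invertible
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]

# Hypothetical example matrix (determinant 2*3 - 1*5 = 1).
A = [[2, 1], [5, 3]]
A_inv = inverse_2x2(A)
I2 = [[1, 0], [0, 1]]

# The product is the identity in both orders.
print(matmul(A, A_inv) == I2, matmul(A_inv, A) == I2)  # prints: True True
```

Exact fractions are used instead of floating point so that the comparison with the identity matrix is exact.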

What is the invertible matrix theorem

So what is the invertible matrix theorem? This theorem states that if $A$ is a square $n \times n$ matrix, then the following statements are equivalent:

- $A$ is an invertible matrix.

- $A$ is row equivalent to the $n \times n$ identity matrix.

- $A$ has $n$ pivot positions.

- The equation $Ax = 0$ has only the trivial solution.

- The columns of $A$ form a linearly independent set.

- The equation $Ax = b$ has at least one solution for each $b$ in $\mathbb{R}^n$.

- The columns of $A$ span $\mathbb{R}^n$.

- The linear transformation $x \to Ax$ maps $\mathbb{R}^n$ onto $\mathbb{R}^n$.

- There is an $n \times n$ matrix $C$ such that $CA = I$.

- There is an $n \times n$ matrix $D$ such that $AD = I$.

Since all of these statements are equivalent, for a given matrix $A$ they are either all true or all false. The full invertible matrix theorem contains more than these 10 statements, but we have selected the ones that are most commonly used.

In a nutshell, the invertible matrix theorem is a set of statements describing properties that a square matrix either has or does not have. Once one of them holds for a given matrix, all of the others follow, because each statement is both a consequence of and a requirement for the others.

Instead of going through the process of inverting a matrix, this theorem lets us know beforehand whether inverting a 2x2 matrix (or any other square matrix, for that matter) is possible, sometimes saving us from tedious calculations. For this reason, the next section is dedicated to explaining the invertible matrix theorem through each of the 10 statements selected for this lesson.

Invertible matrix theorem explained

As mentioned before, the invertible matrix theorem is a set of statements which build on each other, based on the fact that a matrix is either invertible or not. In this section we will go over each of the ten statements we have selected for our lesson (remember, there are many more!) and say a little about each one in order to explain it, or at least put it into context.

In the final section of this lesson, we will work through a few exercise problems that put the theorem to use.

1. $A$ is an invertible matrix.

At this point you are very familiar with invertible matrices; we even dedicated the first section of this lesson to defining them again (as has been done in a few lessons before). Whether a matrix is invertible or not is the first step that triggers many other characteristics: the matrix has them if it is invertible, and lacks them if it is not.

So if a square matrix $A$ is indeed invertible (there exists another square matrix of the same size which, multiplied by $A$, produces the identity matrix of the same size), then all of the next statements are true for this matrix (and for its inverse, for that matter).

2. $A$ is row equivalent to the $n \times n$ identity matrix.

Two matrices are row equivalent when one can be row reduced into the other using the three types of matrix row operations. Therefore, if a matrix is invertible, its reduced echelon form is the identity matrix of the same size.

3. $A$ has $n$ pivot positions.

This statement is easy to understand after statement number two: once you have reduced the original matrix into its reduced echelon form, you will have as many pivots as there are columns (and rows) in the square matrix, that is, one pivot in every row and every column.
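Statements 2 and 3 can be checked together by row reducing a matrix and counting its pivots. What follows is a minimal sketch rather than a definitive implementation: the function names `rref` and `has_n_pivots` and the test matrices are assumptions chosen for illustration. It applies the three row operations (interchange, scaling, replacement) with exact fractions.

```python
from fractions import Fraction

def rref(M):
    """Return (reduced echelon form, list of pivot columns) of matrix M."""
    R = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(R), len(R[0])
    pivots = []
    r = 0  # row where the next pivot will be placed
    for c in range(cols):
        # Look for a nonzero entry in column c, at or below row r.
        p = next((i for i in range(r, rows) if R[i][c] != 0), None)
        if p is None:
            continue  # no pivot in this column
        R[r], R[p] = R[p], R[r]                    # interchange
        pivot_value = R[r][c]
        R[r] = [x / pivot_value for x in R[r]]     # scaling
        for i in range(rows):                      # replacement
            if i != r and R[i][c] != 0:
                factor = R[i][c]
                R[i] = [x - factor * y for x, y in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == rows:
            break
    return R, pivots

def has_n_pivots(M):
    """Statement 3: a square matrix has a pivot in every column."""
    return len(rref(M)[1]) == len(M)

A = [[2, 1], [5, 3]]   # hypothetical invertible matrix
B = [[1, 2], [2, 4]]   # hypothetical non-invertible matrix
print(rref(A)[1], has_n_pivots(A))  # prints: [0, 1] True
print(rref(B)[1], has_n_pivots(B))  # prints: [0] False
```

For `A` the reduced echelon form is the identity matrix, so statements 2 and 3 hold together; for `B` the second row reduces to zeros, so both fail together.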

4. The equation $Ax = 0$ has only the trivial solution.

Statement 4 goes hand in hand with our next one.

Remember that a collection of vectors (which can be put together as the columns of a matrix $A$) is linearly independent exactly when the matrix equation $Ax = 0$ has only the trivial solution.

In other words, the only vector $x$ that the square matrix $A$ can multiply to give zero is the vector with all zero components (the zero vector!). That is why the product $Ax$ equals zero even though $A$ itself is not all zeros. The conclusion is our next statement:

5. The columns of $A$ form a linearly independent set.

Provided that the only solution to the matrix equation $Ax = 0$ is the zero vector $x = 0$, we conclude that the column vectors of matrix $A$ are linearly independent of each other, and thus form a linearly independent set.

This also follows from statement 2, where we said that every invertible matrix can be row reduced into the identity matrix of the same size: row reducing the augmented matrix of $Ax = 0$ isolates each variable and leaves every component of $x$ equal to zero.

Simply said, no column vector of the matrix is a multiple of another or a linear combination of the others. That is why you obtain the identity matrix while row reducing: each variable is isolated, so when solving a matrix equation (by forming an augmented matrix and row reducing) you can read off the solution directly, without having to transcribe the reduced echelon form back into a system of equations and finish by substitution.
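As a small worked illustration of statements 4 and 5 (both matrices below are hypothetical examples, not taken from the lesson), compare an invertible matrix with one whose second column is twice the first:

```latex
% Invertible case: A = [1 2; 3 4]. Row reduce the augmented matrix of Ax = 0.
\left[\begin{array}{cc|c} 1 & 2 & 0 \\ 3 & 4 & 0 \end{array}\right]
\sim
\left[\begin{array}{cc|c} 1 & 2 & 0 \\ 0 & -2 & 0 \end{array}\right]
\sim
\left[\begin{array}{cc|c} 1 & 0 & 0 \\ 0 & 1 & 0 \end{array}\right]
\quad\Longrightarrow\quad x_1 = 0,\; x_2 = 0.

% Non-invertible case: B = [1 2; 2 4], whose second column is 2 times the first.
\left[\begin{array}{cc|c} 1 & 2 & 0 \\ 2 & 4 & 0 \end{array}\right]
\sim
\left[\begin{array}{cc|c} 1 & 2 & 0 \\ 0 & 0 & 0 \end{array}\right]
\quad\Longrightarrow\quad
x = x_2 \begin{bmatrix} -2 \\ 1 \end{bmatrix}, \quad x_2 \text{ free}.
```

In the first case the only solution is the trivial one and the columns are linearly independent. In the second case any nonzero choice of $x_2$ gives a non-trivial solution, which matches the fact that the columns are multiples of each other.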

6. The equation $Ax = b$ has at least one solution for each $b$ in $\mathbb{R}^n$.

This follows from what we just explained for the previous statement. An invertible matrix can be thought of as the coefficient matrix of a system of equations in which every variable can be solved for by row reduction into reduced echelon form, without the need for further computation afterwards.

To find the values of the variables in such a system we solve the matrix equation $Ax = b$, and this equation has at least one solution $x$ for every choice of $b$, just as it does when $b$ is zero. (Remember statement 4: when $b = 0$, all components of $x$ are zero. When $b$ is different from zero, we obtain a different, nonzero $x$.)
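One way to see why a solution always exists is that, for an invertible $A$, the vector $x = A^{-1}b$ solves $Ax = b$ whatever $b$ is. The sketch below tries this on a handful of right-hand sides. The matrix, its inverse, the chosen vectors and the helper names `matvec` and `solve` are all assumptions made for this illustration.

```python
from fractions import Fraction

def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

# Hypothetical invertible 2x2 matrix (det = 1) and its inverse.
A = [[2, 1], [5, 3]]
A_inv = [[3, -1], [-5, 2]]

def solve(b):
    """Return x = A^{-1} b, which solves Ax = b because A is invertible."""
    return matvec(A_inv, b)

for b in ([0, 0], [1, 0], [4, -7], [Fraction(1, 2), 3]):
    x = solve(b)
    assert matvec(A, x) == b   # x really is a solution of Ax = b
    print(b, "->", x)
```

The first right-hand side, $b = 0$, returns the trivial solution of statement 4; every other $b$ returns a nonzero $x$.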

7. The columns of $A$ span $\mathbb{R}^n$.

Statement seven says that the column vectors of an invertible square matrix $A$ must span the coordinate vector space $\mathbb{R}^n$, that is, every vector in $\mathbb{R}^n$ can be written as a linear combination of the columns of $A$. Remember that $n$ is the number of columns of $A$: each column has $n$ components, and $n$ linearly independent vectors with $n$ components are exactly enough to reach every vector of the $n$-dimensional real coordinate space. In other words, if $A$ is an invertible 2x2 matrix, its column vectors are two-dimensional and span the two-dimensional real coordinate space $\mathbb{R}^2$; if $A$ is an invertible 3x3 matrix, its column vectors are three-dimensional and span the three-dimensional real coordinate space $\mathbb{R}^3$.

8. The linear transformation $x \to Ax$ maps $\mathbb{R}^n$ onto $\mathbb{R}^n$.

Statement 8 is a direct consequence of statement 7. If you have a vector $x$ with $n$ components (that is, $n$ entries), the transformation $x \to Ax$ produces a column vector with $n$ entries too, so the result lives in the real coordinate space of $n$ dimensions, $\mathbb{R}^n$. "Onto" means that every vector of $\mathbb{R}^n$ is reached this way, which is the same as saying that the columns of $A$ span $\mathbb{R}^n$.

In other words, if $x$ is a vector with two components (2 entries), the linear transformation $x \to Ax$ can only be performed with $A$ being a 2x2 matrix (due to matrix multiplication requirements), and the result is a vector with two components too. When $A$ is invertible, every vector of $\mathbb{R}^2$ is obtained as $Ax$ for some $x$.

If the vector $x$ contains three components (3 entries), the linear transformation $x \to Ax$ can only be performed with $A$ being a 3x3 matrix, and the result is a vector with three components too. When $A$ is invertible, every vector of $\mathbb{R}^3$ is obtained as $Ax$ for some $x$.

9. There is an $n \times n$ matrix $C$ such that $CA = I$.

Statement 9 tells us that since $A$ is an invertible matrix, there must be a matrix $C$ which, multiplied by matrix $A$, produces the identity matrix of the same size as $A$. This is due to the condition of invertibility that we have seen in past lessons.

10. There is an $n \times n$ matrix $D$ such that $AD = I$.

As we saw in our lesson about the inverse of a 2x2 matrix, if a matrix has an inverse, the product of that inverse and the original matrix is the identity matrix of the same size as the original square matrix. The condition is shown in the next equation:

$$A^{-1} A = A A^{-1} = I_n$$

This means that the matrix $C$ from statement 9 is equal to the matrix $D$ from statement 10: both are the inverse $A^{-1}$.

The only reason statements 9 and 10 tend to be written separately is the conditions for matrix multiplication. Remember that matrix multiplication is not commutative, so it is not intuitive that matrices $C$ and $D$ should be the same. Nevertheless, since we are talking about an inverse matrix, this is a special case in which matrix multiplication happens to be commutative, as we have discussed in past lessons. This counter-intuitive (and yet obvious after studying past lessons) dependence between statements 9 and 10 is a clear example of how all of the conditions listed in the theorem depend on one another, and of why, if one of them fails for a given matrix, all of them fail.
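The equality of the two matrices can be written out in one line. Assuming both $CA = I$ and $AD = I$ hold, and using only the associativity of matrix multiplication:

```latex
C = C\,I = C\,(A D) = (C A)\,D = I\,D = D
```

So a left inverse and a right inverse of the same square matrix must coincide, and that common matrix is $A^{-1}$.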

In summary, the invertible matrix theorem means that an invertible matrix MUST meet all of the other properties mentioned in the theorem. This is due to the relationship these characteristics of a square matrix have with each other: the theorem lists a set of properties of square matrices which depend on one another, so if one holds for a matrix, all of the rest hold too.

This is very useful when proving that a matrix is invertible. For example, if we want to know whether a 2x2 matrix can be inverted without going through the long methods described in past lessons, we can check the matrix in question against the statements in the theorem. If we can easily deduce that one of them is met, that is enough to conclude the matrix is invertible, since all of the other statements from the theorem will be true too.

While determining whether a matrix is invertible, or while inverting a matrix, if you ever find that some of the invertible matrix theorem properties are met for a given matrix and some are not, go back and re-check all of your work: there must be a mistake in the calculations that needs to be corrected.

Proof of invertible matrix theorem

To see the invertible matrix theorem in action we will work through a variety of cases where you can use the 10 selected statements to deduce when a matrix is invertible and when it is not. These invertible matrix theorem examples are much simpler than our usual exercise problems and often will not require mathematical calculations, just simple deduction.

Example 1

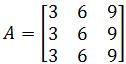

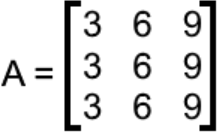

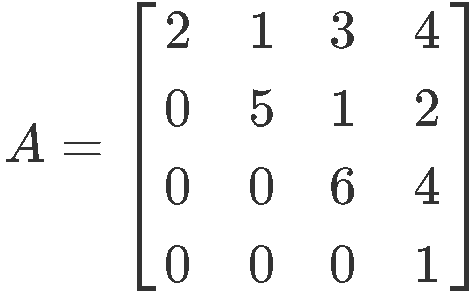

Given matrix $A$ as defined below:

Is $A$ an invertible matrix?

Instead of trying to invert matrix $A$ by methods such as row reduction, which can be quite long, let us look at what the invertible matrix theorem says and check whether one of its statements is useful.

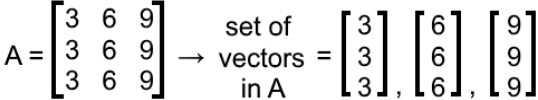

If we look at statement number 5 (the columns of $A$ form a linearly independent set), we can quickly see whether $A$ can be inverted or not. So, let us write out the column vectors that make up matrix $A$:

As you can see, the vectors in the set are multiples of each other: if you multiply the first vector by 2, you obtain the second vector; if you multiply the first vector by 3, you obtain the third vector. Therefore, these vectors are NOT linearly independent, and so statement number 5 from the theorem does not hold in this case.

If statement 5 is not true for this matrix $A$, then none of the statements are; therefore $A$ is a non-invertible matrix.
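The same check can be sketched in code. The matrix from this example is not reproduced in the text, so the matrix `A` below is a hypothetical stand-in that merely has the property described (second column equal to 2 times the first, third column equal to 3 times the first). The helper names `columns` and `scalar_multiple` are also assumptions for illustration.

```python
from fractions import Fraction

def columns(M):
    """Return the columns of matrix M (list of rows) as lists."""
    return [list(col) for col in zip(*M)]

def scalar_multiple(u, v):
    """Return k if v == k*u for some scalar k, else None (u must be nonzero)."""
    idx = next(i for i, x in enumerate(u) if x != 0)
    k = Fraction(v[idx], u[idx])
    return k if all(k * a == b for a, b in zip(u, v)) else None

# Hypothetical stand-in for the matrix of Example 1.
A = [[1, 2, 3],
     [2, 4, 6],
     [3, 6, 9]]

c1, c2, c3 = columns(A)
k12 = scalar_multiple(c1, c2)
k13 = scalar_multiple(c1, c3)
print(k12, k13)  # prints: 2 3

# Columns that are multiples of each other are linearly dependent,
# so statement 5 fails and the matrix is not invertible.
columns_dependent = k12 is not None or k13 is not None
print(columns_dependent)  # prints: True
```

Note that this only detects the simplest kind of dependence, one column being a multiple of another. Columns can also be dependent through a combination of several columns, which requires row reduction to detect.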

Example 2

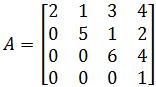

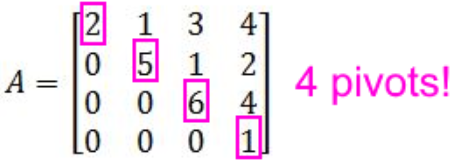

Is the following matrix invertible? Use as few calculations as possible.

Notice that in this case the 4x4 matrix is in echelon form, so we can quickly use statement 3 to check for invertibility. Statement 3 says: $A$ has $n$ pivot positions.

For this case, $n = 4$, and if you look at equation 6, $A$ has 4 pivot positions:

Therefore, $A$ is invertible because statement 3 holds true, and that means statement 1 also holds true.
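When a matrix is already in echelon form, counting pivots needs no row reduction at all: each nonzero row contributes one pivot, located at its leading entry. The sketch below illustrates this. The matrices `E` and `F` are hypothetical echelon-form examples (the matrix of this exercise is not reproduced in the text), and `pivot_positions` is a name chosen here for illustration.

```python
def pivot_positions(E):
    """Return (row, column) of the leading entry of each nonzero row.

    Assumes E is already in echelon form; no row reduction is performed.
    """
    positions = []
    for r, row in enumerate(E):
        lead = next((c for c, x in enumerate(row) if x != 0), None)
        if lead is not None:
            positions.append((r, lead))
    return positions

# Hypothetical 4x4 matrix in echelon form with a pivot in every row.
E = [[1, 0, 7, 5],
     [0, 3, 1, 2],
     [0, 0, 2, 9],
     [0, 0, 0, 4]]

# Hypothetical 4x4 matrix in echelon form with a zero row.
F = [[1, 0, 7, 5],
     [0, 3, 1, 2],
     [0, 0, 0, 9],
     [0, 0, 0, 0]]

print(len(pivot_positions(E)) == len(E))  # prints: True  (4 pivots, invertible)
print(len(pivot_positions(F)) == len(F))  # prints: False (3 pivots, not invertible)
```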

Example 3

Assume that $A$ is a square $n \times n$ matrix. Determine if the following statements are true or false:

- If $A$ is an invertible matrix, then the linear transformation $x \to Ax$ maps $\mathbb{R}^n$ onto $\mathbb{R}^n$.

This statement is true, since it is saying that if statement 1 holds true, then statement 8 holds true too.

- If there is an $n \times n$ matrix $C$ such that $CA = I$, then there is an $n \times n$ matrix $D$ such that $AD = I$.

This statement is true, since it is saying that if statement 9 holds true, then statement 10 holds true too.

- If the equation $Ax = 0$ has only the trivial solution, then $A$ is not invertible.

False. This statement is saying that if statement 4 is true, then statement 1 is false, which is not possible: either all of the statements in the invertible matrix theorem are met, or none of them are.

- If the equation $Ax = 0$ has a non-trivial solution, then $A$ has fewer than $n$ pivots.

True. The first part of this statement is the opposite of statement 4 from the theorem, which means the matrix in this case is not invertible. The second part is the opposite of another statement from the theorem (in this case statement 3), which also means that the matrix is not invertible. Since the statements of the theorem are either all true or all false, statement 4 failing forces statement 3 to fail as well, and so the statement is true.

To finish up this lesson we recommend that you take a look at the next handout, where you will find a complete summary on the inverse of a matrix which can be useful for your independent studies. To add to your resources on the properties of invertible matrices, visit this last link for an explained example on how to use the invertible matrix theorem.

So this is it for today's lesson, we'll see you in our next one!

The Invertible Matrix Theorem states the following:

Let $A$ be a square $n \times n$ matrix. Then the following statements are equivalent. That is, for a given $A$, the statements are either all true or all false.

1. $A$ is an invertible matrix.

2. $A$ is row equivalent to the $n \times n$ identity matrix.

3. $A$ has $n$ pivot positions.

4. The equation $Ax = 0$ has only the trivial solution.

5. The columns of $A$ form a linearly independent set.

6. The equation $Ax = b$ has at least one solution for each $b$ in $\mathbb{R}^n$.

7. The columns of $A$ span $\mathbb{R}^n$.

8. The linear transformation $x \to Ax$ maps $\mathbb{R}^n$ onto $\mathbb{R}^n$.

9. There is an $n \times n$ matrix $C$ such that $CA = I$.

10. There is an $n \times n$ matrix $D$ such that $AD = I$.

There are extensions of the invertible matrix theorem, but these are what we need to know for now. Keep in mind that this only works for square matrices.
