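Instead of finding the least-squares solution of $A\mathbf{x} = \mathbf{b}$, we will be finding it for $X\boldsymbol{\beta} = \mathbf{y}$, where

$X$ → design matrix

$\boldsymbol{\beta}$ → parameter vector

$\mathbf{y}$ → observation vector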

Least-Squares Line

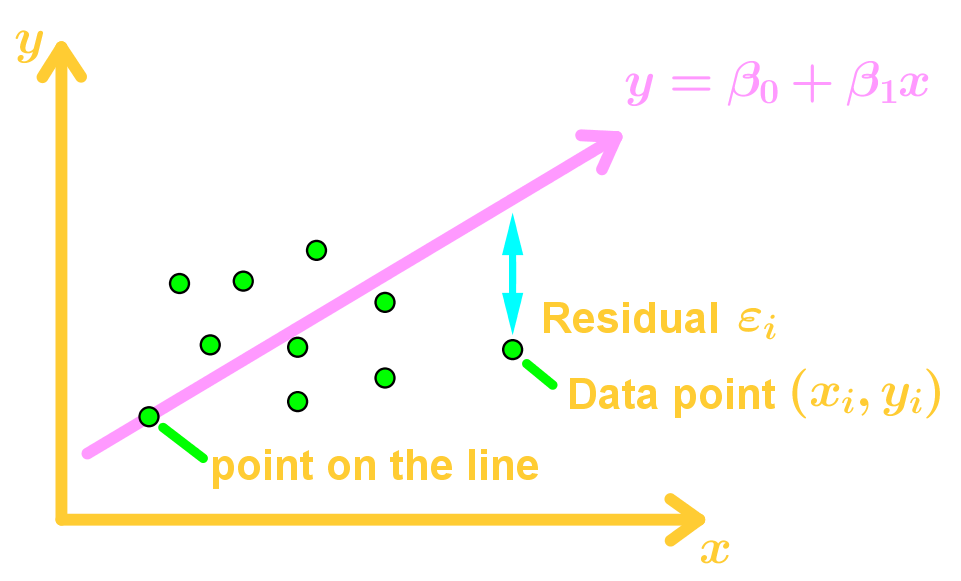

Suppose we are given data points and want to find the line that best fits them. Let the best-fit line be the linear equation

$$y = \beta_0 + \beta_1 x$$

and let the data points be $(x_1, y_1), \dots, (x_n, y_n)$. The graph should look something like this:

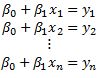

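Our goal is to determine the parameters $\beta_0$ and $\beta_1$. Suppose for the moment that every data point lies on the line. Then

$$\beta_0 + \beta_1 x_j = y_j, \qquad j = 1, \dots, n$$

This is a linear system, which we can write as $X\boldsymbol{\beta} = \mathbf{y}$:

$$\begin{bmatrix} 1 & x_1 \\ 1 & x_2 \\ \vdots & \vdots \\ 1 & x_n \end{bmatrix} \begin{bmatrix} \beta_0 \\ \beta_1 \end{bmatrix} = \begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{bmatrix}$$

Then the least-squares solution to $X\boldsymbol{\beta} = \mathbf{y}$ will be the solution $\hat{\boldsymbol{\beta}}$ of the normal equations $X^T X \boldsymbol{\beta} = X^T \mathbf{y}$.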
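As a minimal sketch of the line fit described above, the following NumPy snippet builds a design matrix with a column of ones and a column of x-values, then solves the normal equations. The four data points are hypothetical and chosen only for illustration; the variable names are assumptions as well.

```python
import numpy as np

# Hypothetical data points (x_j, y_j), used only for illustration.
x = np.array([2.0, 5.0, 7.0, 8.0])
y = np.array([1.0, 2.0, 3.0, 3.0])

# Design matrix: a column of ones (for beta_0) next to the x-values (for beta_1).
X = np.column_stack([np.ones_like(x), x])

# Solve the normal equations X^T X beta = X^T y for the parameter vector.
beta = np.linalg.solve(X.T @ X, X.T @ y)

print(beta)  # approximately [0.2857, 0.3571], i.e. beta_0 = 2/7 and beta_1 = 5/14
```

For this data the fitted line is $y = \tfrac{2}{7} + \tfrac{5}{14}x$. Solving the normal equations directly works here because the two columns of the design matrix are linearly independent, so $X^T X$ is invertible.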

General Linear Model

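Since the data points do not actually lie on the line, there are residual values, also known as errors. So we introduce a vector $\boldsymbol{\epsilon}$ called the residual vector, where

$\mathbf{y} = X\boldsymbol{\beta} + \boldsymbol{\epsilon}$ → $\boldsymbol{\epsilon} = \mathbf{y} - X\boldsymbol{\beta}$

Our goal is to minimize the length of $\boldsymbol{\epsilon}$ (the error), so that $X\boldsymbol{\beta}$ is approximately equal to $\mathbf{y}$. This means we are finding a least-squares solution of $X\boldsymbol{\beta} = \mathbf{y}$ using the normal equations $X^T X \boldsymbol{\beta} = X^T \mathbf{y}$.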
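To make the role of the residual vector concrete, here is a small sketch that computes it for a fitted line and checks two things: the residual is orthogonal to the columns of the design matrix (which is what the normal equations say), and nudging the parameters away from the least-squares solution makes the residual longer. The data and the perturbation are hypothetical, and `np.linalg.lstsq` is used here as one convenient solver, not as the only option.

```python
import numpy as np

# Hypothetical data points, used only for illustration.
x = np.array([2.0, 5.0, 7.0, 8.0])
y = np.array([1.0, 2.0, 3.0, 3.0])
X = np.column_stack([np.ones_like(x), x])

# Least-squares parameter vector (lstsq returns several values; keep the first).
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)

# Residual vector: observed values minus the values predicted by the line.
residual = y - X @ beta_hat
print(np.linalg.norm(residual))

# The normal equations are equivalent to X^T (y - X beta) = 0.
print(X.T @ residual)

# Any other parameter choice gives a residual at least as long.
other = beta_hat + np.array([0.1, -0.05])  # arbitrary perturbation
print(np.linalg.norm(y - X @ other))
```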

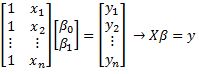

Least-Squares of Other Curves

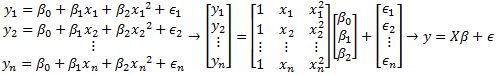

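Let the data points be $(x_1, y_1), \dots, (x_n, y_n)$, and suppose we want to find the best fit using the function $y = \beta_0 + \beta_1 x + \beta_2 x^2$, where $\beta_0, \beta_1, \beta_2$ are parameters. We are now fitting a quadratic function instead of a line, but the model is still linear in the parameters.

Again, the data points don't actually lie on the curve, so we add residual values $\epsilon_j$, where

$$y_j = \beta_0 + \beta_1 x_j + \beta_2 x_j^2 + \epsilon_j, \qquad j = 1, \dots, n$$

In matrix form this is $\mathbf{y} = X\boldsymbol{\beta} + \boldsymbol{\epsilon}$, where row $j$ of $X$ is $\begin{bmatrix} 1 & x_j & x_j^2 \end{bmatrix}$. Since we are minimizing the length of $\boldsymbol{\epsilon}$, we can find the least-squares solution using $X^T X \boldsymbol{\beta} = X^T \mathbf{y}$. The same approach applies to other functions that are linear in their parameters.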
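A quadratic fit can be sketched the same way as a line fit; the only change is an extra column of squared x-values in the design matrix. The data below is hypothetical, and the comparison with `np.polyfit` is included only as a sanity check.

```python
import numpy as np

# Hypothetical data points, used only for illustration.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.1, 1.9, 5.2, 9.8, 17.1])

# Design matrix with columns 1, x, x^2 (one column per parameter).
X = np.column_stack([np.ones_like(x), x, x**2])

# Solve the normal equations X^T X beta = X^T y.
beta = np.linalg.solve(X.T @ X, X.T @ y)
print(beta)  # [beta_0, beta_1, beta_2]

# np.polyfit returns coefficients from highest degree to lowest,
# so reverse them to match the order of beta.
print(np.polyfit(x, y, 2)[::-1])
```

The model is quadratic in $x$ but linear in the parameters, which is why the same normal equations still apply.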

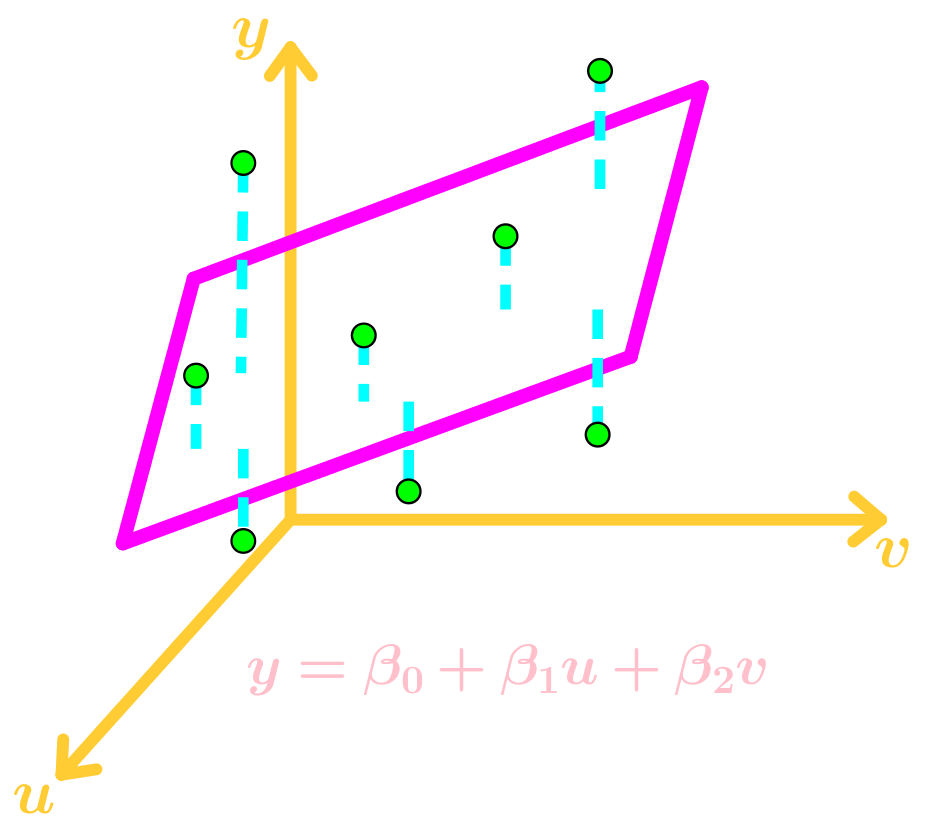

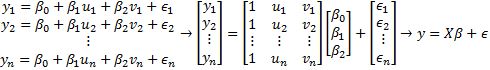

Multiple Regression

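Let the data points be $(u_1, v_1, y_1), \dots, (u_n, v_n, y_n)$, and suppose we want to use the best-fit function $y = \beta_0 + \beta_1 u + \beta_2 v$, where $\beta_0, \beta_1, \beta_2$ are parameters.

Again, the data points don't actually lie on the function, so we add residual values $\epsilon_j$, where

$$y_j = \beta_0 + \beta_1 u_j + \beta_2 v_j + \epsilon_j, \qquad j = 1, \dots, n$$

In matrix form this is $\mathbf{y} = X\boldsymbol{\beta} + \boldsymbol{\epsilon}$, where row $j$ of $X$ is $\begin{bmatrix} 1 & u_j & v_j \end{bmatrix}$. Since we are minimizing the length of $\boldsymbol{\epsilon}$, we can find the least-squares solution using $X^T X \boldsymbol{\beta} = X^T \mathbf{y}$. The same approach applies to other multi-variable functions that are linear in their parameters.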
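As a rough sketch of multiple regression with two input variables, the snippet below fits a plane of the form "constant plus a multiple of each input" to hypothetical data. The names `u` and `v` for the two inputs are assumptions made for this example, as are the data values.

```python
import numpy as np

# Hypothetical observations: two inputs (u, v) and one output y per data point.
u = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
v = np.array([2.0, 1.0, 4.0, 3.0, 5.0])
y = np.array([3.1, 3.9, 7.2, 7.8, 11.0])

# Design matrix with columns 1, u, v (one column per parameter).
X = np.column_stack([np.ones_like(u), u, v])

# Solve the normal equations X^T X beta = X^T y.
beta = np.linalg.solve(X.T @ X, X.T @ y)
print(beta)  # [beta_0, beta_1, beta_2]

# Residual vector and its length, which the fit minimizes.
residual = y - X @ beta
print(np.linalg.norm(residual))
```

Adding more input variables only adds more columns to the design matrix; the solving step stays the same as long as the columns remain linearly independent.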