Orthogonal sets

What does orthogonal mean?

Remember from our past lesson on the inner product, length and orthogonality that we defined two vectors as orthogonal if they are perpendicular to each other (for lines, orthogonal and perpendicular mean the same thing). In linear algebra we no longer think only in two dimensions. Since we live in a three-dimensional space, we extend the idea to three dimensions and use the word orthogonal for the perpendicularity not only of lines but also of planes and spaces. This widens the range of calculations we can perform and the cases we can study.

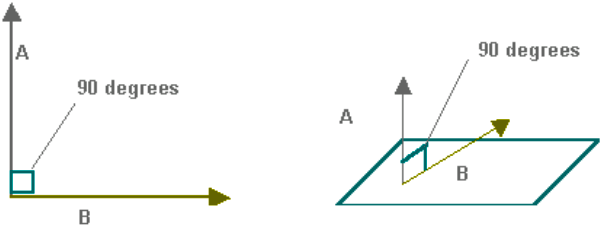

In the next diagram, you can see an example of orthogonal vectors:

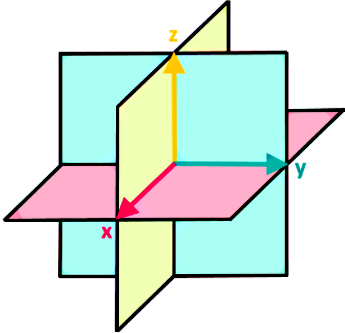

Mathematically speaking, two vectors are orthogonal when their inner product is zero. Be careful not to rely on pictures alone: two vectors lying in two different planes of the orthogonal planes shown in the diagram are not automatically orthogonal. For instance, $(1,1,0)$ lies in the $xy$-plane and $(1,0,1)$ lies in the $xz$-plane, yet their dot product is $1\cdot1 + 1\cdot0 + 0\cdot1 = 1 \neq 0$. Only the inner product settles the question.

Before we continue, recall that the inner product of two vectors is the same as their dot product (or scalar product), so we will use these terms interchangeably throughout the lesson.

How to find an orthogonal vector set

So, answer quickly: how can you tell if two vectors are orthogonal to each other? The dot product! Keep this fresh in your head!
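As a minimal sketch of this test in Python, the snippet below computes the dot product of two vectors stored as plain lists and reports them as orthogonal when the result is zero. The function names `dot` and `is_orthogonal` are our own, and the tolerance `tol` is an assumption added to cope with floating-point round-off.

```python
import math

def dot(u, v):
    """Dot (inner) product of two vectors of equal length."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))

def is_orthogonal(u, v, tol=1e-12):
    """Two vectors are orthogonal when their dot product is zero (up to tol)."""
    return math.isclose(dot(u, v), 0.0, abs_tol=tol)

print(dot([1, 2, 3], [3, 0, -1]))            # 1*3 + 2*0 + 3*(-1) = 0
print(is_orthogonal([1, 2, 3], [3, 0, -1]))  # True
print(is_orthogonal([1, 1, 0], [1, 0, 1]))   # False, the dot product is 1
```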

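To formally define orthogonal sets with this in mind: a set of vectors $\{\mathbf{u}_1, \ldots, \mathbf{u}_p\}$ in $\mathbb{R}^n$ is an orthogonal set if each pair of distinct vectors from the set is orthogonal. This means that the inner product (dot product) of any two different vectors within the set is equal to zero:

$$\mathbf{u}_i \cdot \mathbf{u}_j = 0 \quad \text{whenever } i \neq j \qquad (1)$$

Note that in equation 1 the subindices $i$ and $j$ cannot be equal, so the equation applies only when $i \neq j$. If they were equal, we would be taking the dot product of a vector with itself, which gives the square of its magnitude, $\mathbf{u}_i \cdot \mathbf{u}_i = \|\mathbf{u}_i\|^2$, and that is not zero unless the vector is the zero vector.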
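Equation 1 translates directly into code: check every pair of distinct vectors exactly once. Below is a minimal sketch in Python, assuming vectors are stored as plain lists; the names `dot` and `is_orthogonal_set` and the three sample vectors are illustrative choices of ours, not data from the lesson's examples.

```python
import math
from itertools import combinations

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def is_orthogonal_set(vectors, tol=1e-12):
    """True if u_i . u_j == 0 (up to tol) for every pair with i != j.

    combinations() yields each unordered pair once (positions i < j), so a
    vector is never paired with itself, which would give ||u_i||^2 instead.
    Because u . v == v . u, checking i < j covers every pair with i != j.
    """
    return all(math.isclose(dot(u, v), 0.0, abs_tol=tol)
               for u, v in combinations(vectors, 2))

# Hypothetical sample vectors in R^3.
S = [[3, 1, 1], [-1, 2, 1], [-1/2, -2, 7/2]]
print(is_orthogonal_set(S))  # True
print(dot(S[0], S[0]))       # 11, which is ||u_1||^2, not 0
```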

With this in mind, if $\{\mathbf{u}_1, \ldots, \mathbf{u}_p\}$ is an orthogonal set of nonzero vectors in $\mathbb{R}^n$, then this is enough to prove that the vectors are linearly independent. Thus, the vectors form a basis for the subspace $S$ they span, and this basis is what we call an orthogonal basis.
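The reason is a short argument that uses only equation 1. Suppose some linear combination of the nonzero vectors $\mathbf{u}_1, \ldots, \mathbf{u}_p$ equals the zero vector, and take the dot product of both sides with $\mathbf{u}_j$. Every cross term $\mathbf{u}_k \cdot \mathbf{u}_j$ with $k \neq j$ vanishes:

$$0 = \mathbf{0} \cdot \mathbf{u}_j = (c_1\mathbf{u}_1 + \cdots + c_p\mathbf{u}_p) \cdot \mathbf{u}_j = c_j\,(\mathbf{u}_j \cdot \mathbf{u}_j)$$

Since $\mathbf{u}_j \neq \mathbf{0}$, we have $\mathbf{u}_j \cdot \mathbf{u}_j = \|\mathbf{u}_j\|^2 > 0$, so $c_j = 0$ for every $j$. The only combination giving the zero vector is the trivial one, which is exactly linear independence.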

To check whether a set of nonzero vectors is an orthogonal basis for $S$, we can simply verify that it is an orthogonal set.

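Remember from our lesson about linear combinations and vector equations that a linear combination of the vectors is defined as:

$$\mathbf{y} = c_1\mathbf{u}_1 + c_2\mathbf{u}_2 + \cdots + c_p\mathbf{u}_p \qquad (2)$$

where $c_1, c_2, \ldots, c_p$ are constants (weights). When $\{\mathbf{u}_1, \ldots, \mathbf{u}_p\}$ is an orthogonal basis and $\mathbf{y}$ lies in the subspace it spans, these constants can be calculated using the formula:

$$c_j = \frac{\mathbf{y} \cdot \mathbf{u}_j}{\mathbf{u}_j \cdot \mathbf{u}_j}, \qquad j = 1, \ldots, p \qquad (3)$$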
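Equation 3 comes from the same dot-product idea. Take the dot product of both sides of equation 2 with $\mathbf{u}_j$; by orthogonality only the $j$-th term survives:

$$\mathbf{y} \cdot \mathbf{u}_j = (c_1\mathbf{u}_1 + \cdots + c_p\mathbf{u}_p) \cdot \mathbf{u}_j = c_j\,(\mathbf{u}_j \cdot \mathbf{u}_j) \quad\Longrightarrow\quad c_j = \frac{\mathbf{y} \cdot \mathbf{u}_j}{\mathbf{u}_j \cdot \mathbf{u}_j}$$

The division is allowed because $\mathbf{u}_j \cdot \mathbf{u}_j = \|\mathbf{u}_j\|^2$ is positive for a nonzero vector.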

Since the vectors of an orthogonal set of nonzero vectors are linearly independent, we can use this formula to find the weights of the linear combination in equation 2. Therefore, to determine whether a set is orthogonal, find the orthogonal basis, and write a vector in terms of it, we follow these steps:

1) Determine whether the vectors in the set are orthogonal by computing the dot product of every pair of them.

2) If the dot product of every pair is zero, the set is an orthogonal set; provided none of its vectors is the zero vector, it forms an orthogonal basis for the subspace it spans.

3) If asked, express a given vector as a linear combination of the vectors in the set (equation 2), computing each constant with equation 3.

We will look at a few examples of these operations in the next section. For Example 1 we only need steps 1 and 2, while for Example 2 we need all three steps. Which steps you use depends on what you are asked, but the process itself is straightforward.
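The three steps can be sketched in Python as follows. This is a minimal sketch, not the lesson's own method: the function name `orthogonal_weights` and the sample basis and vector are hypothetical illustrations, and exact `Fraction` arithmetic is an assumption we chose so that the "is the dot product zero?" tests are exact.

```python
from fractions import Fraction
from itertools import combinations

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def orthogonal_weights(basis, y):
    """Steps 1-3: check that `basis` is an orthogonal set of nonzero vectors,
    then return the weights c_j = (y . u_j) / (u_j . u_j)."""
    # Steps 1 and 2: every pair of distinct vectors must have a zero dot product.
    if any(dot(u, v) != 0 for u, v in combinations(basis, 2)):
        raise ValueError("the set is not orthogonal")
    if any(dot(u, u) == 0 for u in basis):
        raise ValueError("the zero vector cannot belong to a basis")
    # Step 3: weights of the linear combination (equation 3).
    return [Fraction(dot(y, u), dot(u, u)) for u in basis]

# Hypothetical orthogonal basis of R^3 and a vector to decompose.
basis = [[3, 1, 1], [-1, 2, 1], [Fraction(-1, 2), -2, Fraction(7, 2)]]
y = [6, 1, -8]
c = orthogonal_weights(basis, y)
print(c)  # [Fraction(1, 1), Fraction(-2, 1), Fraction(-2, 1)]

# Rebuild y from the weights to confirm the linear combination (equation 2).
rebuilt = [sum(cj * u[i] for cj, u in zip(c, basis)) for i in range(len(y))]
print(rebuilt == y)  # True
```

With floating-point input, compare the dot products against zero with a tolerance instead (for example using `math.isclose`).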

Now, what happens if you are asked for an orthonormal basis? Notice: NOT orthogonal.

Well, a set of vectors $\{\mathbf{u}_1, \ldots, \mathbf{u}_p\}$ is an orthonormal set if it is an orthogonal set of unit vectors. In other words, all of the vectors within the set must be orthogonal to each other and, in addition, each must have a magnitude (length) of one. If a subspace is spanned by such an orthonormal set, we say that the vectors form an orthonormal basis for it. This follows from what we already know: unit vectors are nonzero, so an orthonormal set is an orthogonal set of nonzero vectors and is therefore linearly independent.
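A minimal Python sketch of the orthonormal test: pairwise orthogonality plus unit length for every vector. It also shows how dividing each vector of an orthogonal set by its length produces an orthonormal set. The names `is_orthonormal_set` and `normalize`, the sample vectors, and the tolerance are our own assumptions.

```python
import math
from itertools import combinations

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def is_orthonormal_set(vectors, tol=1e-9):
    """Orthogonal pairwise AND every vector has length 1 (up to tol)."""
    pairwise = all(math.isclose(dot(u, v), 0.0, abs_tol=tol)
                   for u, v in combinations(vectors, 2))
    unit = all(math.isclose(dot(u, u), 1.0, abs_tol=tol) for u in vectors)
    return pairwise and unit

def normalize(u):
    """Divide a nonzero vector by its length to get a unit vector."""
    length = math.sqrt(dot(u, u))
    return [a / length for a in u]

# Hypothetical orthogonal (but not unit-length) vectors in R^3.
orthogonal = [[3, 1, 1], [-1, 2, 1], [-1/2, -2, 7/2]]
print(is_orthonormal_set(orthogonal))                          # False
print(is_orthonormal_set([normalize(u) for u in orthogonal]))  # True
```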

Following the definition of orthonormal, we can check whether the columns of a matrix are orthonormal vectors by performing the following matrix multiplication:

$$U^T U = I \qquad (4)$$

where $U$ is an $m \times n$ matrix and $I$ is the $n \times n$ identity matrix. The columns of $U$ are orthonormal if and only if equation 4 holds.
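The check in equation 4 can be sketched in Python with plain lists: entry $(i, j)$ of $U^T U$ is the dot product of column $i$ with column $j$, so the product equals the identity exactly when the columns are orthonormal. The helper names and the sample matrix `U` are hypothetical, and the tolerance is an assumption for floating-point input.

```python
import math

def transpose(A):
    """Transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]

def has_orthonormal_columns(U, tol=1e-9):
    """True when U^T U equals the n x n identity (n = number of columns)."""
    G = matmul(transpose(U), U)  # G[i][j] = (column i) . (column j)
    n = len(G)
    return all(math.isclose(G[i][j], 1.0 if i == j else 0.0, abs_tol=tol)
               for i in range(n) for j in range(n))

# Hypothetical 3x2 matrix with orthonormal columns.
s = 1 / math.sqrt(2)
U = [[s, 2/3],
     [s, -2/3],
     [0, 1/3]]
print(has_orthonormal_columns(U))                 # True
print(has_orthonormal_columns([[1, 1], [0, 1]]))  # False
```

For a non-square $U$ like this one, $U U^T$ is not the identity; the test must use $U^T U$.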

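With equation 4 in mind, if $U$ is an $m \times n$ matrix with orthonormal columns and $\mathbf{x}$ and $\mathbf{y}$ are vectors in $\mathbb{R}^n$, then the following three identities are true:

1) $\|U\mathbf{x}\| = \|\mathbf{x}\|$

2) $(U\mathbf{x}) \cdot (U\mathbf{y}) = \mathbf{x} \cdot \mathbf{y}$

3) $(U\mathbf{x}) \cdot (U\mathbf{y}) = 0$ if and only if $\mathbf{x} \cdot \mathbf{y} = 0$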
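All three identities follow from equation 4 once the dot product is written as a matrix product:

$$(U\mathbf{x}) \cdot (U\mathbf{y}) = (U\mathbf{x})^T (U\mathbf{y}) = \mathbf{x}^T (U^T U)\, \mathbf{y} = \mathbf{x}^T I\, \mathbf{y} = \mathbf{x} \cdot \mathbf{y}$$

Choosing $\mathbf{y} = \mathbf{x}$ gives $\|U\mathbf{x}\|^2 = \|\mathbf{x}\|^2$, so multiplying by $U$ preserves lengths; and because the two dot products are always equal, one is zero exactly when the other is.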

Now that you know what orthogonal means (and, for that matter, what non-orthogonal means), how to tell if two vectors are orthogonal, and how to find orthogonal vectors and sets of them, it is time to work through a few examples.

Finding orthogonal vectors

In the next four problems we will apply the definition of orthogonality to vectors, sets and bases, and then see how it extends to orthonormality. Have fun solving the exercises!

Example 1

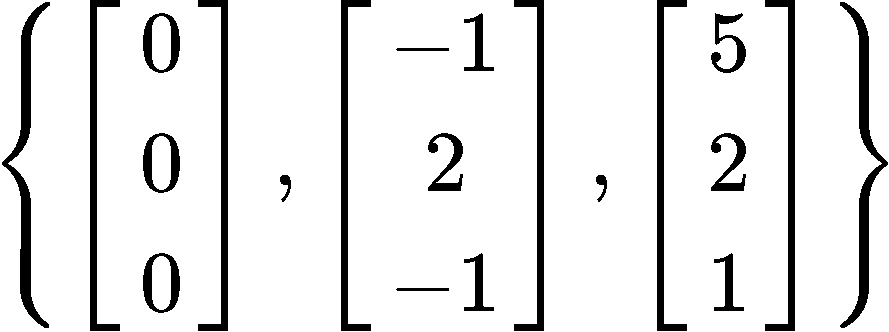

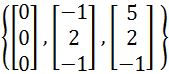

Consider the set of three vectors shown below:

Is this an orthogonal set?

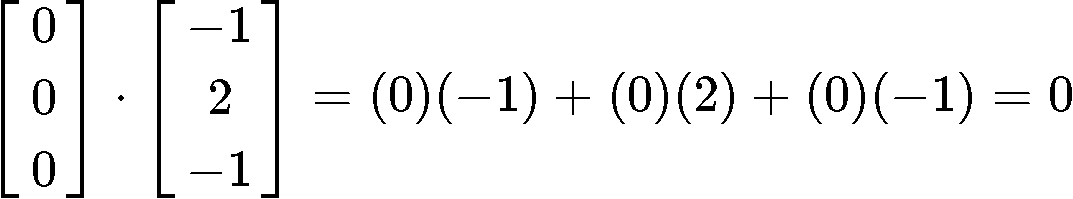

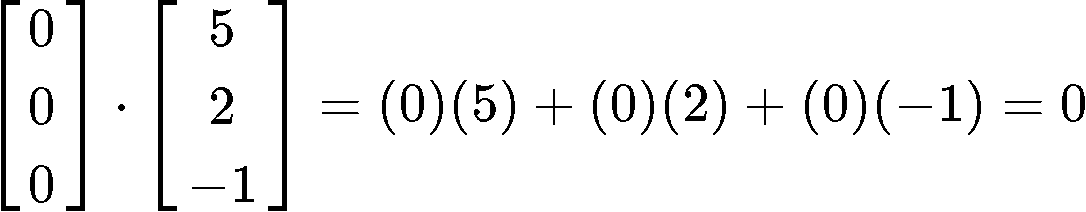

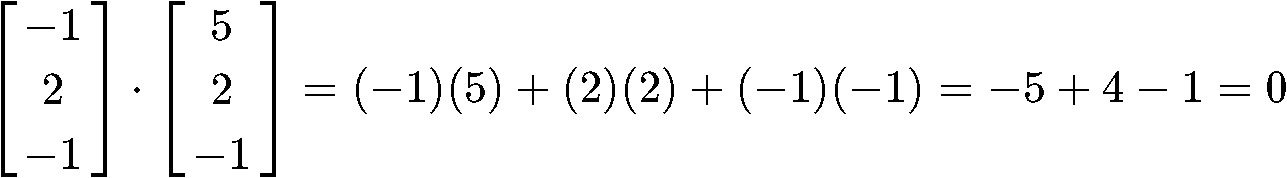

To determine whether the set is orthogonal, we have to check whether every pair of the given vectors is orthogonal. How do we do that? With the dot product! Remember that the dot product of orthogonal vectors is equal to zero, so we just have to compute the dot product of each vector with each of the others and look at the results.

Since all of the dot products are zero, the three vectors in the set are all orthogonal to each other, and therefore this is an orthogonal set of vectors.

Example 2

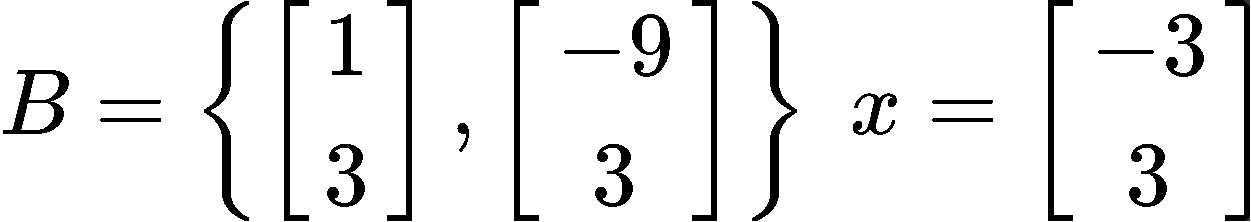

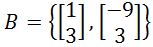

Given the set of vectors and the column vector defined below:

Verify that the set is an orthogonal basis, and then express the column vector as a linear combination of the vectors in the set.

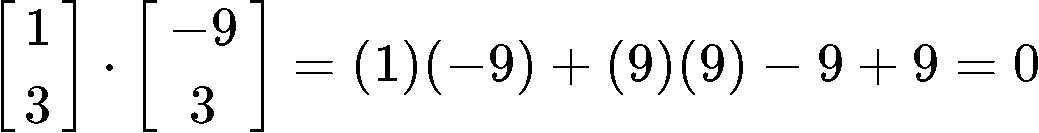

We start by verifying that the two vectors in the set are orthogonal; for that, we compute their dot product:

A result of zero for the dot product tells us that the set is a set of orthogonal vectors.

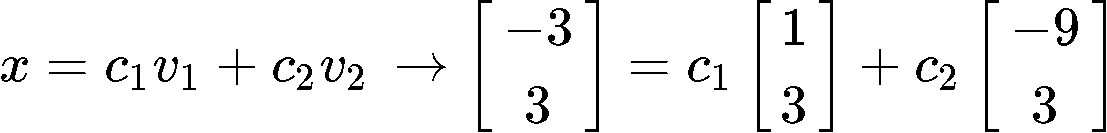

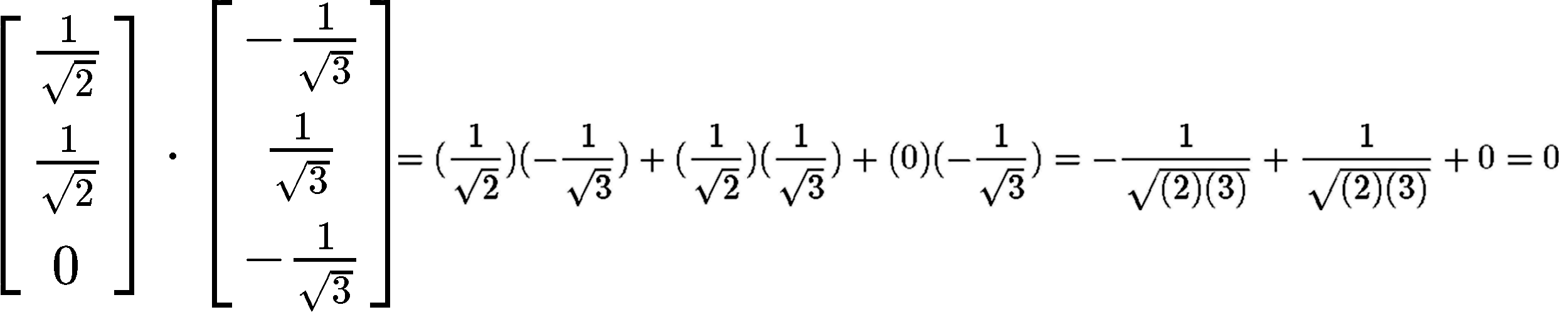

Our next step is to express the column vector as a linear combination of the vectors in the set. Using equation 2, we write the linear combination of the two vectors from the set and set it equal to the column vector:

All that is left to complete this expression is to find the values of the constants $c_1$ and $c_2$. Using equation 3, we have:

Finally, we rewrite the linear combination using the values found for $c_1$ and $c_2$:

Example 3

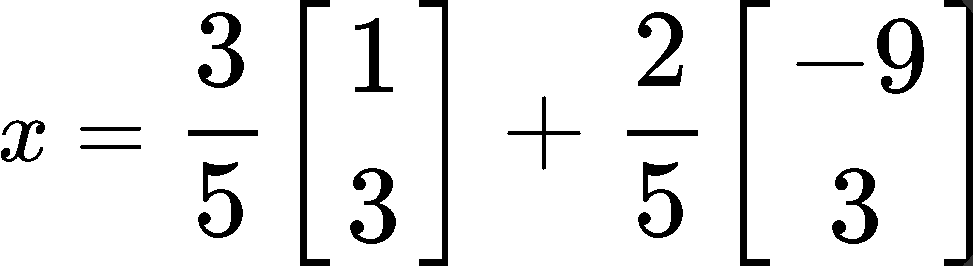

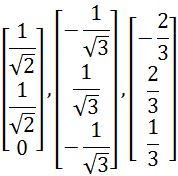

Given the set of vectors defined below, is this set an orthonormal basis?

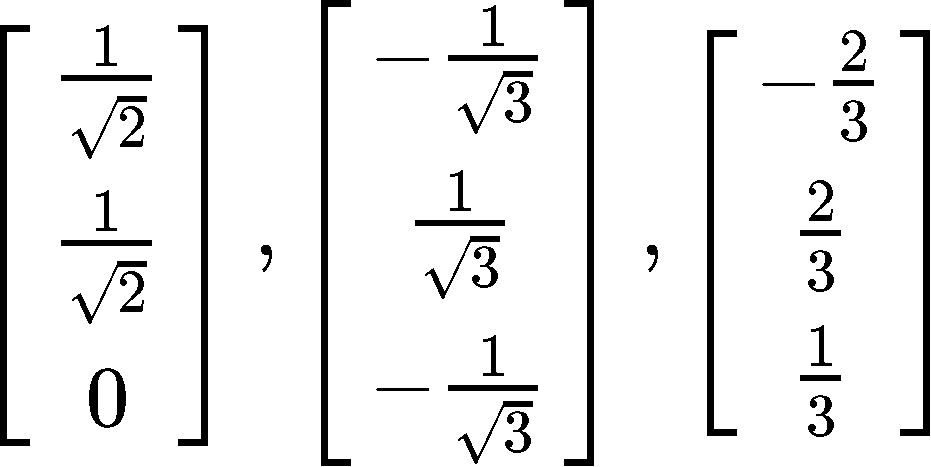

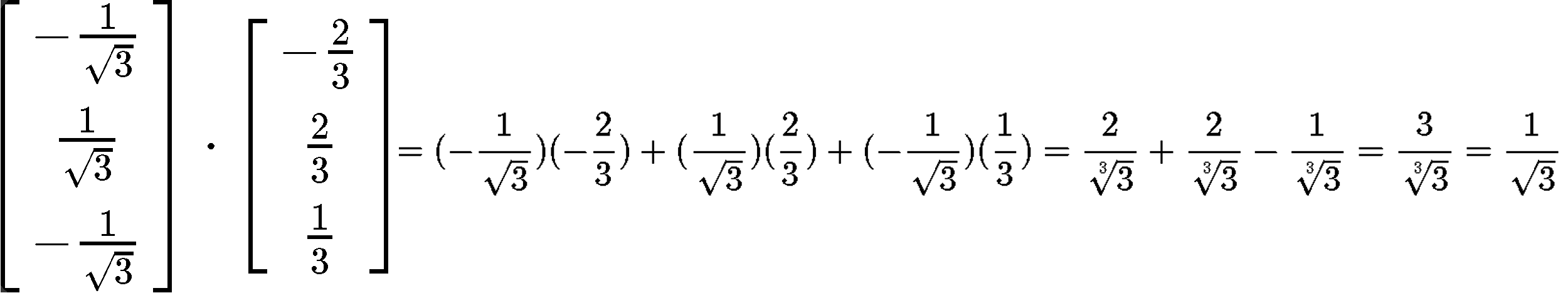

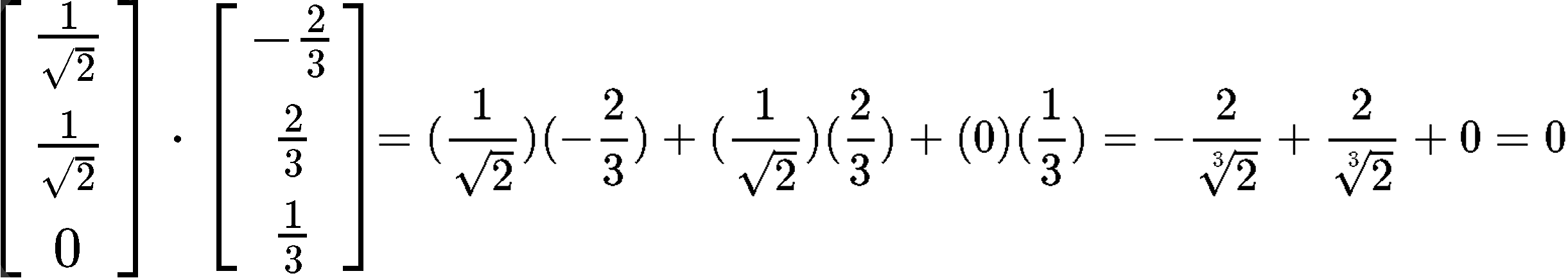

To prove that this is an orthonormal basis, we need to show that it is an orthonormal set. Remember that an orthonormal set is composed of vectors that are orthogonal to each other and, at the same time, are all unit vectors. So, let us check the orthogonality of the vectors in the set first:

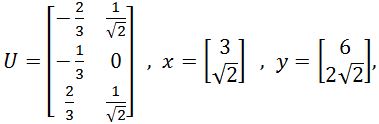

Example 4

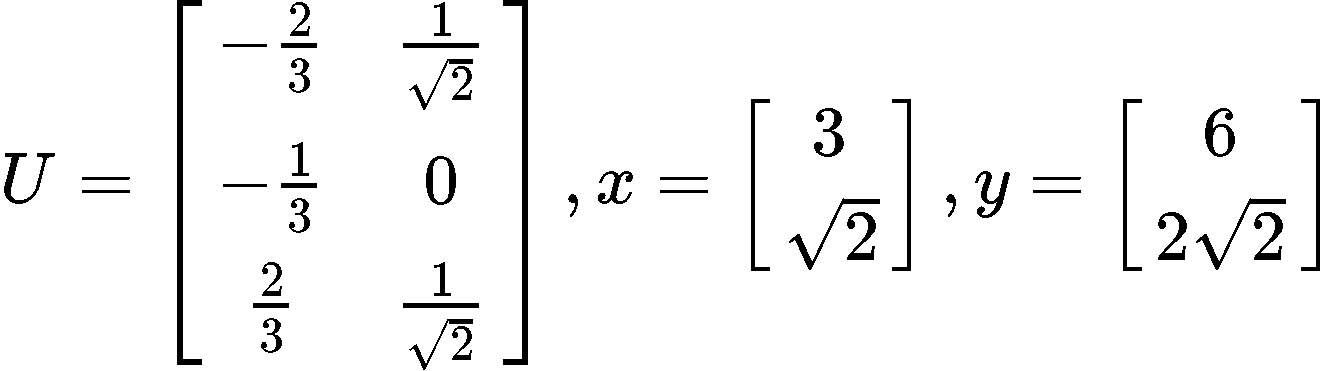

Let $U$, $\mathbf{x}$ and $\mathbf{y}$ be as defined below, where $U$ has orthonormal columns.

Now, verify that:

$$(U\mathbf{x}) \cdot (U\mathbf{y}) = \mathbf{x} \cdot \mathbf{y}$$

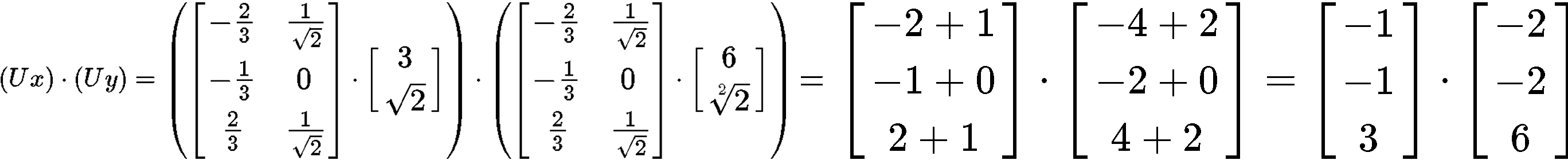

We will solve this problem in two parts: the left-hand side and the right-hand side. Since the right-hand side is shorter to compute, let's start with that dot product:

Now we work through the left-hand side of the equation:

Notice that both sides of the equation yield the value 22, so the identity is verified for these vectors.
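To repeat this kind of check numerically, here is a minimal Python sketch that evaluates both sides separately, as done above. The matrix `U` and the vectors `x` and `y` are hypothetical values chosen for illustration, not the ones from Example 4, so the common value here is 12 rather than 22.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def matvec(A, x):
    """Multiply a matrix, stored as a list of rows, by a vector."""
    return [dot(row, x) for row in A]

# Hypothetical data: a 3x2 matrix with orthonormal columns and two vectors in R^2.
s = 1 / math.sqrt(2)
U = [[s, 2/3],
     [s, -2/3],
     [0, 1/3]]
x = [math.sqrt(2), 3]
y = [-3 * math.sqrt(2), 6]

Ux, Uy = matvec(U, x), matvec(U, y)
lhs = dot(Ux, Uy)   # left-hand side: (Ux) . (Uy)
rhs = dot(x, y)     # right-hand side: x . y
print(lhs, rhs)     # both approximately 12 (up to round-off)
print(math.isclose(lhs, rhs))                # True
print(math.isclose(dot(Ux, Ux), dot(x, x)))  # True, so ||Ux|| = ||x||
```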

Now that you have learned about orthogonality and sets of orthogonal vectors, we recommend visiting this lesson on orthogonal sets, which includes a variety of detailed problems that can supplement your studies.

In our next lesson we will introduce the concept of orthogonal projection, which is closely tied to the definitions we saw in this lesson. We hope you enjoyed today's lesson. See you in the next one!

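Summary

A set of vectors $\{\mathbf{u}_1, \ldots, \mathbf{u}_p\}$ in $\mathbb{R}^n$ is an orthogonal set if each pair of distinct vectors from the set is orthogonal. In other words,

$$\mathbf{u}_i \cdot \mathbf{u}_j = 0$$

where $i \neq j$.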

If $\{\mathbf{u}_1, \ldots, \mathbf{u}_p\}$ is an orthogonal set of nonzero vectors in $\mathbb{R}^n$, then the vectors are linearly independent. Thus, the vectors form a basis for the subspace $S$ they span. We call this an orthogonal basis.

To check whether a set of nonzero vectors is an orthogonal basis for $S$, simply verify that it is an orthogonal set.

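If $\mathbf{y} = c_1\mathbf{u}_1 + \cdots + c_p\mathbf{u}_p$ for an orthogonal basis $\{\mathbf{u}_1, \ldots, \mathbf{u}_p\}$, the weights $c_j$ are calculated by using the formula:

$$c_j = \frac{\mathbf{y} \cdot \mathbf{u}_j}{\mathbf{u}_j \cdot \mathbf{u}_j}$$

where $j = 1, \ldots, p$.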

A set $\{\mathbf{u}_1, \ldots, \mathbf{u}_p\}$ is an orthonormal set if it is an orthogonal set of unit vectors.

If $S$ is the subspace spanned by this set, then we say that $\{\mathbf{u}_1, \ldots, \mathbf{u}_p\}$ is an orthonormal basis for $S$. This is because the vectors are already linearly independent.

An $m \times n$ matrix $U$ has orthonormal columns if and only if $U^T U = I$.

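Let $U$ be an $m \times n$ matrix with orthonormal columns, and let $\mathbf{x}$ and $\mathbf{y}$ be in $\mathbb{R}^n$. Then the following 3 things are true:

1) $\|U\mathbf{x}\| = \|\mathbf{x}\|$

2) $(U\mathbf{x}) \cdot (U\mathbf{y}) = \mathbf{x} \cdot \mathbf{y}$

3) $(U\mathbf{x}) \cdot (U\mathbf{y}) = 0$ if and only if $\mathbf{x} \cdot \mathbf{y} = 0$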

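Consider $L$ to be the subspace spanned by a nonzero vector $\mathbf{u}$. Then the orthogonal projection of $\mathbf{y}$ onto $L$ is calculated to be:

$$\hat{\mathbf{y}} = \text{proj}_L\,\mathbf{y} = \frac{\mathbf{y} \cdot \mathbf{u}}{\mathbf{u} \cdot \mathbf{u}}\,\mathbf{u}$$

The component of $\mathbf{y}$ orthogonal to $\mathbf{u}$ (denoted as $\mathbf{z}$) would be:

$$\mathbf{z} = \mathbf{y} - \hat{\mathbf{y}}$$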
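A minimal Python sketch of the projection formula and of the orthogonal component, using exact fractions. The function name `project_onto_line` and the sample vectors are hypothetical; the final dot product confirms that the component $\mathbf{z}$ is orthogonal to $\mathbf{u}$.

```python
from fractions import Fraction

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def project_onto_line(y, u):
    """Orthogonal projection of y onto L = Span{u}: ((y . u) / (u . u)) u."""
    scale = Fraction(dot(y, u), dot(u, u))
    return [scale * a for a in u]

# Hypothetical vectors in R^2.
y = [7, 6]
u = [4, 2]
y_hat = project_onto_line(y, u)
z = [a - b for a, b in zip(y, y_hat)]  # component of y orthogonal to u
print(y_hat, z)
print(dot(z, u))  # 0: z is orthogonal to u
```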
